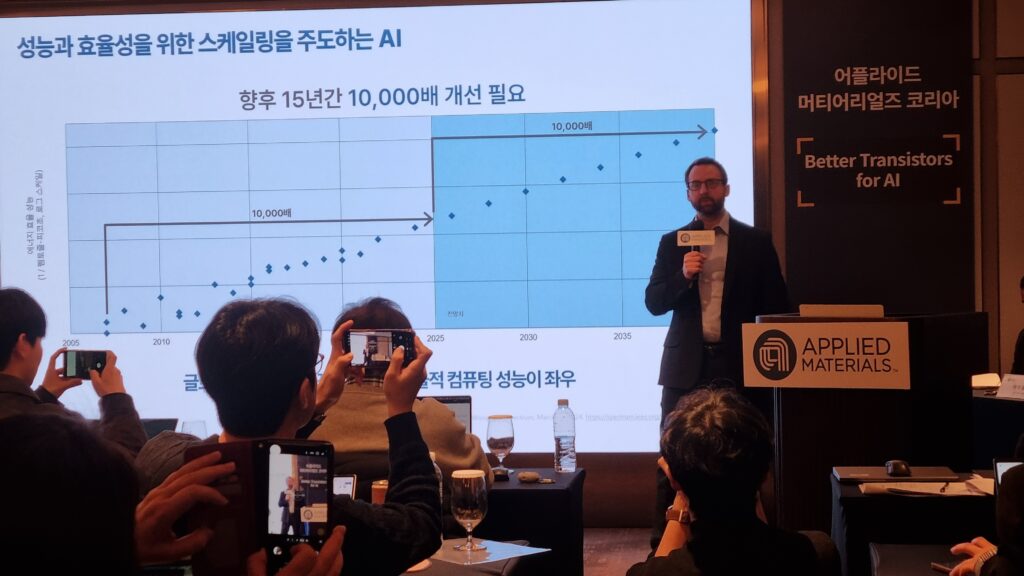

Vision AI software company Neurala has announced the launch of its AI explainability technology, purpose-built for industrial and manufacturing applications. The new feature helps manufacturers improve quality inspections by accurately identifying the objects in an image that are causing a particular problem or that present an anomaly.

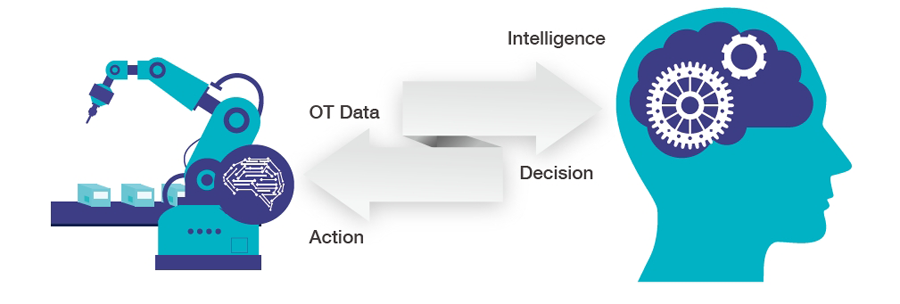

“Explainability is widely recognized as a key feature for AI systems, especially when it comes to identifying bias or ethical issues. But this capability has immense potential and value in industrial use cases as well, where manufacturers demand not only accurate AI, but also need to understand why a particular decision was made,” said Max Versace, CEO and co-founder of Neurala. “We’re excited to launch this new technology to empower manufacturers to do more with the massive amounts of data collected by IIoT systems, and act with the precision required to meet the demands of the Industry 4.0 era.”

Neurala’s explainability technology was built to address the digitization challenges of Industry 4.0. Industrial IoT systems constantly collect massive amounts of anomaly data, in the form of images, for use in the quality inspection process. With Neurala’s explainability feature, manufacturers can derive more actionable insights from these datasets by determining whether an image is truly anomalous or whether the result is a false positive caused by other conditions in the environment, such as lighting.

This gives manufacturers a more precise understanding of what went wrong, and where, in the production process, and allows them to take proper action – whether to fix an issue in the production flow or improve image quality.

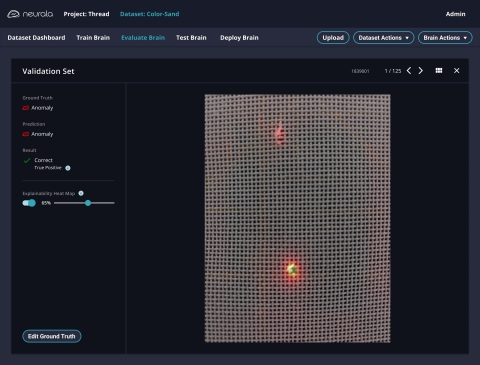

Manufacturers can use Neurala’s explainability feature with either Classification or Anomaly Recognition models. Explainability highlights the area of an image that causes the vision AI model to make a specific decision about a defect. For Classification, that decision is the class assigned to an object; for Anomaly Recognition, it is whether an object is normal or anomalous. Armed with this detailed understanding of how the AI model reaches its decisions, manufacturers will be able to build better-performing models that continuously improve processes and efficiencies.
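Neurala has not published how its explainability feature computes these highlighted regions. As a rough illustration of the general idea only, the sketch below uses occlusion sensitivity, a generic technique that masks one patch of the image at a time and records how much the model's score drops. The function names, the patch size, and the toy scoring function are all assumptions made for this example and are not part of any Neurala product.

```python
from typing import Callable, List

Image = List[List[float]]  # grayscale image as rows of pixel intensities


def occlusion_map(image: Image,
                  score_fn: Callable[[Image], float],
                  patch: int = 2,
                  fill: float = 0.0) -> List[List[float]]:
    """Return a coarse heatmap of score drops, one value per masked patch."""
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    heatmap = []
    for top in range(0, height, patch):
        row = []
        for left in range(0, width, patch):
            # Copy the image and blank out a single patch.
            masked = [r[:] for r in image]
            for y in range(top, min(top + patch, height)):
                for x in range(left, min(left + patch, width)):
                    masked[y][x] = fill
            # A large drop means the model relied on this region.
            row.append(baseline - score_fn(masked))
        heatmap.append(row)
    return heatmap


def toy_anomaly_score(image: Image) -> float:
    """Hypothetical stand-in model: the anomaly score is the brightest pixel."""
    return max(max(r) for r in image)


if __name__ == "__main__":
    # 4x4 image with one bright "defect" pixel at row 1, column 2.
    img = [[0.1] * 4 for _ in range(4)]
    img[1][2] = 0.9
    for heat_row in occlusion_map(img, toy_anomaly_score):
        print(heat_row)
```

In this toy case only the patch covering the bright pixel produces a non-zero drop, which is the kind of localized signal that lets an inspector tell a real defect from, say, a lighting artifact elsewhere in the frame.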

Explainability is now available as part of Neurala’s cloud solution, Brain Builder, and will soon be available with Neurala’s on-premise software, VIA (Vision Inspection Automation). The technology is simple to implement, supporting Neurala’s mission to make AI accessible to all manufacturers, regardless of their level of expertise or familiarity with AI. With no custom code required, anyone can leverage explainability to gain a deeper understanding of image features that matter for vision AI applications.
