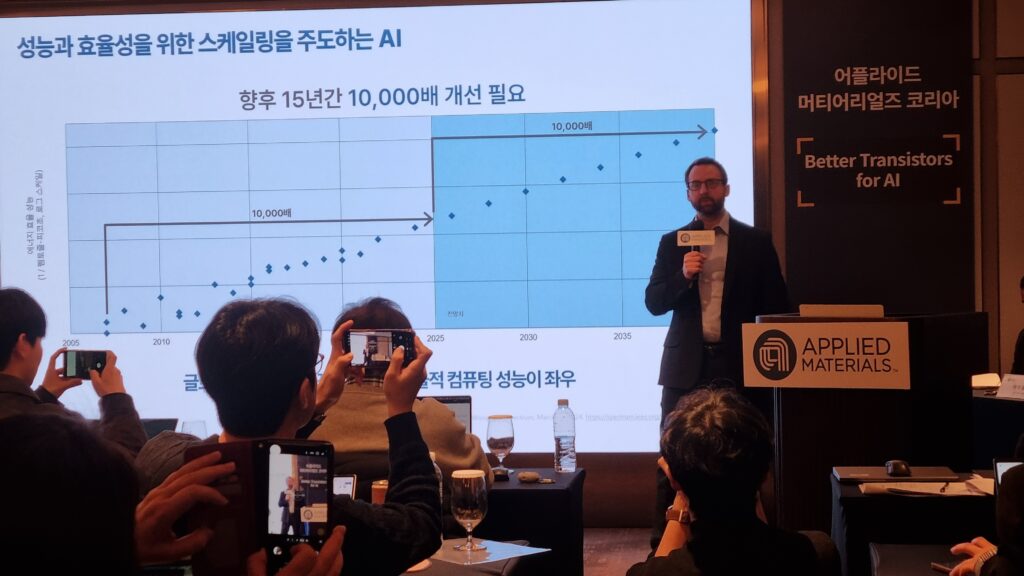

By Ron Wilson, Editor-in-Chief, Altera Corporation

Advanced driver-assistance systems (ADAS) are the hot topic in automotive electronics today. These systems range from passive safety systems that monitor lane exits, through active safety systems such as adaptive cruise control, to future situation-aware collision-avoidance systems. The growing demands that ADAS evolution places on data transport and computing are fundamentally changing automotive electronics architectures, and it is becoming clear that these changes foreshadow the future of many other kinds of embedded systems.

Goals and Requirements

Today, vehicle-safety electronic systems are isolated functions that control a specific variable in response to a specific set of inputs. An air-bag controller, for example, detonates its explosive charge when an accelerometer output trips a limit comparator. A traction-control system applies a brake to reduce torque on a wheel when the data stream from a shaft encoder indicates sudden acceleration. While these systems contribute to vehicle safety, they can also act inappropriately because their inputs give them a very narrow view of the world. Hitting a pothole or bumping into a car while parking can fire an air bag. A rough road can confuse traction control.

All that is about to change, according to Steve Ohr, semiconductor research director at Gartner. “Advanced air-bag controllers have multiple sensors that literally vote on whether a crash is happening,” Ohr explained in his introduction to the panel he moderated at the GlobalPress Summit in Santa Cruz, California, on April 24. “In the near future, the controllers will consult sensors that monitor passengers and cargo to identify how best to deploy the various air bags during a crash.”
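To make the contrast concrete, here is a minimal Python sketch of a single limit comparator next to a 2-of-3 sensor vote. It is a hypothetical illustration, not how any production air-bag controller works: the 20 g threshold, the three-sensor layout, and the function names are all assumptions.

```python
from typing import Sequence

# Hypothetical threshold and sensor count, for illustration only.
CRASH_THRESHOLD_G = 20.0  # assumed deceleration limit, in g


def single_sensor_fire(accel_g: float) -> bool:
    """Legacy style: one accelerometer output trips one limit comparator."""
    return accel_g >= CRASH_THRESHOLD_G


def voted_fire(readings_g: Sequence[float], votes_needed: int = 2) -> bool:
    """Fire only if at least `votes_needed` sensors agree that a crash is under way."""
    votes = sum(1 for g in readings_g if g >= CRASH_THRESHOLD_G)
    return votes >= votes_needed


pothole = [25.0, 3.0, 4.0]   # one sensor jolted hard, the others read normally
crash = [40.0, 35.0, 38.0]   # a real impact is seen by every sensor

print(single_sensor_fire(pothole[0]))  # True: the lone comparator fires
print(voted_fire(pothole))             # False: one vote is not enough
print(voted_fire(crash))               # True
```

The vote filters out a jolt that only one sensor sees; the next step Ohr describes, consulting occupant and cargo sensors, goes beyond thresholds toward a model of the vehicle.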

At this point, the air-bag controller has crossed a critical threshold: from responding to an input to maintaining—and responding to—a dynamic model of the vehicle. This change, Ohr emphasized, is being echoed in other systems throughout the vehicle, with profound consequences. “We see the same pattern in safety systems such as lane-exit monitors and impending-hazard detectors,” Ohr stated. “Each system is getting more intelligence, moving to sensor integration and then to sensor fusion.”

This evolution is happening in an already astoundingly complex environment. Panelist Frank Schirrmeister, senior director of product marketing at Cadence Design Systems, observed, “In 2010, a high-end car could have 750 CPUs, performing 2,000 different functions, and requiring one billion lines of code.” Schirrmeister said that this degree of complexity was forcing developers to adopt hardware-independent platforms such as the Automotive Open System Architecture (AUTOSAR) and integrated mechanical-electrical-software development suites. In this fog of complexity, system designers are struggling to cope with a sudden surge of change in the way their systems handle data.

Isolation to Fusion

Hazard-avoidance systems offer a microcosm of these sweeping changes, according to panelist Brian Jentz, automotive business-unit director at Altera Corporation. Today, even relatively simple systems such as back-up cameras can have significant processing requirements, Jentz said. “Inexpensive cameras need fish-eye correction to fix the perspective so drivers can interpret the display easily.” These cameras also need compensation to produce useful images in low light, and often they will require automated object recognition. These functions are best done in the camera, but it is often cheaper to do them in the central electronic control unit (ECU). “Cameras are moving to high-definition,” Jentz continued, “and this can mean megapixels per frame. If you are sending images to the ECU, you may have to compress the data before it leaves the camera.”
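Fish-eye correction is essentially a geometric remapping of pixels. The sketch below assumes an idealized equidistant lens (image radius proportional to ray angle) and a pinhole target view with the optical centre at the image centre; real cameras are calibrated with richer distortion models, and the function name and parameters here are hypothetical.

```python
import math


def undistort_equidistant(src, f):
    """Remap an equidistant fish-eye image to a rectilinear (pinhole) view.

    src: 2-D list of pixel values, rows of equal length.
    f:   focal length in pixels, assumed the same for both lens models.
    Uses nearest-neighbour sampling.
    """
    h, w = len(src), len(src[0])
    cy, cx = (h - 1) / 2.0, (w - 1) / 2.0   # assumed optical centre
    out = [[0] * w for _ in range(h)]
    for y in range(h):
        for x in range(w):
            dx, dy = x - cx, y - cy
            r_u = math.hypot(dx, dy)        # radius in the corrected image
            if r_u == 0.0:
                scale = 1.0
            else:
                theta = math.atan(r_u / f)  # ray angle for a pinhole camera
                r_d = f * theta             # radius where the fish-eye lens put that ray
                scale = r_d / r_u
            sx = round(cx + dx * scale)     # nearest source pixel
            sy = round(cy + dy * scale)
            if 0 <= sx < w and 0 <= sy < h: # guard for lens models that map outside
                out[y][x] = src[sy][sx]
    return out


frame = [[10 * y + x for x in range(7)] for y in range(7)]
corrected = undistort_equidistant(frame, f=3.0)
```

Because the mapping depends only on the lens, a production pipeline would typically compute the source coordinates once and reuse them as a lookup table for every frame, which is part of why the work can live either in the camera or in the ECU.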

Further evolution will complicate the data-transport problem even more. Hazard detection will move from simply showing an image from a rear-facing camera to modeling the whole dynamic environment surrounding the car. At this point the system must stitch together images from multiple cameras: at least eight for a 360-degree view with range and velocity detection, as shown in Figure 1. A central processor becomes essential, and the ADAS must transport many streams of compressed video to the ECU concurrently.
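A back-of-the-envelope calculation shows why compression is unavoidable. The camera format (1280 × 720 pixels, 30 frames/s, 16 bits/pixel) and the 100:1 compression ratio below are assumptions chosen for illustration, not figures from the panel.

```python
def raw_video_bps(width, height, bits_per_pixel, fps):
    """Uncompressed video bit rate, in bits per second."""
    return width * height * bits_per_pixel * fps


# Assumed camera format: 720p, 30 frames/s, 16 bits/pixel (e.g. YUV 4:2:2).
per_camera = raw_video_bps(1280, 720, 16, 30)
cameras = 8               # minimum count cited for a 360-degree view
compression_ratio = 100   # assumed codec ratio; real ratios vary with content

total_raw = cameras * per_camera
total_compressed = total_raw / compression_ratio

print(f"raw per camera:   {per_camera / 1e6:.1f} Mbit/s")        # 442.4
print(f"raw, 8 cameras:   {total_raw / 1e6:.1f} Mbit/s")         # 3538.9
print(f"compressed total: {total_compressed / 1e6:.1f} Mbit/s")  # 35.4
```

Even with these modest assumptions, eight uncompressed streams would exceed a 1 Gbit/s link more than three times over.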

Figure 1. Placement and use of cameras determines the algorithms required to process the images.

But things get harder still. Video cameras are hampered by darkness and disabled by rain, snow, road spray, and other sorts of optical interference. So designers team the video cameras with directed-beam, millimeter-wave radar to improve reliability in low-visibility conditions. Now the ECU must fuse the video data with the very different radar signal in order to interpret its surroundings. This fusion will probably be done using a system-estimation technique called a Kalman filter.

Kalman and its Discontents

A Kalman filter can take in multiple streams of noisy data from different sorts of sensors and combine them into a single, less noisy model of the system under observation. Roughly speaking, it does this by maintaining three internal data sets: a current estimate of the state of the system, a “dead reckoning” model (usually based on physics) for predicting the next state of the system, and a table rating the credibility of each input. On each cycle, the Kalman filter assembles the sensor data and uses it to create a provisional estimate of the system state: for example, the locations and velocities of the objects surrounding your car. Simultaneously, the filter creates a second estimate by applying the dead-reckoning model to the previous state: the other cars should have moved to here, here, and here, the pedestrian should have walked that far, and the trees should have stayed where they were. Next, the filter compares the two estimates and, taking into account the credibility ratings of the inputs, replaces the previous state with a new best estimate: here’s where I think everything really is. Finally, the Kalman filter sends the new state estimate to the analysis software so it can be evaluated for potential hazards, and it updates its sensor-credibility table to note any questionable inputs.
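For readers who want to see the mechanics, here is a minimal one-dimensional Python sketch that tracks a single object's range with a constant-velocity model and fuses one camera reading and one radar reading. It follows the textbook formulation, in which the “credibility rating” corresponds to each sensor's measurement-noise variance and the comparison step is the innovation (measured minus predicted) weighted by the Kalman gain. The class name, noise values, and measurements are hypothetical, and the adaptive re-rating of sensors described above is not shown.

```python
class Kalman1D:
    """Constant-velocity Kalman filter for one object's range.

    State x = [position (m), velocity (m/s)]; P is its 2x2 covariance.
    Each sensor reports position with its own noise variance r, which
    plays the role of a credibility rating: smaller r means more trust.
    """

    def __init__(self, pos, vel, pos_var, vel_var, accel_var):
        self.x = [pos, vel]
        self.P = [[pos_var, 0.0], [0.0, vel_var]]
        self.q = accel_var  # assumed variance of unmodelled acceleration

    def predict(self, dt):
        """Dead-reckoning step: x <- F x, P <- F P F^T + Q, with F = [[1, dt], [0, 1]]."""
        p, v = self.x
        self.x = [p + v * dt, v]
        (a, b), (c, d) = self.P
        q = self.q
        self.P = [
            [a + dt * (b + c) + dt * dt * d + q * dt**4 / 4, b + dt * d + q * dt**3 / 2],
            [c + dt * d + q * dt**3 / 2, d + q * dt**2],
        ]

    def update(self, z, r):
        """Correct the prediction with a position measurement z of variance r."""
        (a, b), (c, d) = self.P
        s = a + r              # innovation variance (H = [1, 0])
        k0, k1 = a / s, c / s  # Kalman gain
        y = z - self.x[0]      # innovation: measured minus predicted
        self.x = [self.x[0] + k0 * y, self.x[1] + k1 * y]
        self.P = [[(1 - k0) * a, (1 - k0) * b], [c - k1 * a, d - k1 * b]]


# Hypothetical scenario: a car 50 m ahead, closing at 10 m/s, 100 ms cycle.
kf = Kalman1D(pos=50.0, vel=-10.0, pos_var=4.0, vel_var=4.0, accel_var=1.0)
kf.predict(dt=0.1)          # dead reckoning says about 49.0 m
kf.update(z=49.2, r=1.0)    # camera range estimate (assumed variance 1.0 m^2)
kf.update(z=48.9, r=0.25)   # radar range (assumed more precise)
print(f"range {kf.x[0]:.2f} m, variance {kf.P[0][0]:.3f} m^2")
```

The fused variance ends up below that of either sensor alone, which is the payoff the next paragraph describes.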

The good news is that the Kalman filter can assemble a stable and accurate model of the outside world despite intermittent readings, high noise levels, and a mix of very different kinds of sensor data. But there are issues, too. Kalman filters working with high-definition (HD) video inputs can consume huge amounts of computing power, and the analytic routines they enable can take far more, as suggested in Figure 2. “Algorithm development is already ahead of silicon performance,” Jentz noted. “There is basically an unlimited demand for performance.”

Figure 2. Sensor fusion concentrates many heavy algorithms and network terminations on one chip.

There is another issue with important system implications. While Kalman filters are inherently tolerant of noise, they are not immune to it. Variations in the latency between the sensors and the ECU, particularly variations large enough for samples to arrive out of order, appear to the filter as noise. Such latency variations can cause the filter to reduce its reliance on some sensors, or to ignore altogether information that could have made a vital difference.
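The size of the effect is easy to estimate. The sketch below computes the position error a stale sample carries if it is treated as current, and applies a 3-sigma innovation gate of the kind tracking filters commonly use to reject outliers. The closing speed, delays, innovation variance, and function names are assumptions for illustration.

```python
def latency_error_m(closing_speed_mps, delay_s):
    """Position error carried by a sample that is delay_s old but treated as current."""
    return closing_speed_mps * delay_s


def passes_gate(innovation_m, innovation_var_m2, gate=9.0):
    """Accept a measurement if its normalised innovation squared is within the gate.

    gate=9.0 corresponds to a 3-sigma bound for a one-dimensional innovation.
    """
    return innovation_m**2 / innovation_var_m2 <= gate


# Hypothetical numbers: target closing at 30 m/s, innovation variance 0.5 m^2.
fresh = latency_error_m(30.0, 0.005)  # 5 ms network delay
stale = latency_error_m(30.0, 0.100)  # 100 ms delay, e.g. stuck behind other traffic
print(passes_gate(fresh, 0.5))  # True
print(passes_gate(stale, 0.5))  # False
```

In this example a 5 ms delay passes the gate, while a 100 ms delay produces a 3 m error that the filter discards, even though the underlying reading was accurate when it was taken.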

This is important because of trends in vehicle network architectures. Purpose-built control networks such as the controller-area network (CAN) or the perhaps-emerging FlexRay network can limit jitter and guarantee delivery of packets carrying some sensor data, although they may lack the bandwidth for even compressed HD video. In principle, system designers could calculate the bandwidth they need for a given maximum jitter, and then provision the system with enough network links to meet the need, even if that resulted in dedicated CAN segments for each camera and radar receiver. But in practice, automotive manufacturers are headed in a different direction: cost control.
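The provisioning arithmetic itself is simple; the hard part is choosing the inputs. This sketch counts how many links of a given raw bit rate one stream needs, assuming a compressed HD camera stream of 4 Mbit/s and a single 50% usable-payload fraction for every bus. Both numbers are illustrative assumptions; only the nominal rates (up to 1 Mbit/s for classic high-speed CAN, 10 Mbit/s per FlexRay channel, 100 Mbit/s for 100 Mbit Ethernet) are standard figures. The function name is hypothetical, and the sketch checks bandwidth only: meeting a jitter bound would additionally require schedule analysis.

```python
import math


def links_needed(stream_bps, link_bps, usable_fraction):
    """Number of parallel links needed to carry one stream (bandwidth only)."""
    return math.ceil(stream_bps / (link_bps * usable_fraction))


STREAM_BPS = 4e6  # assumed compressed HD camera stream
USABLE = 0.5      # assumed share of the raw bit rate left for payload

for name, rate in [("classic CAN", 1e6), ("FlexRay channel", 10e6),
                   ("100 Mbit/s Ethernet", 100e6)]:
    print(f"{name}: {links_needed(STREAM_BPS, rate, USABLE)} link(s) per camera")
# classic CAN: 8, FlexRay channel: 1, 100 Mbit/s Ethernet: 1
```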

“The direction is Ethernet everywhere in the car,” argued panelist Ali Abaye, senior director of product marketing at Broadcom. Abaye said that as the number of sensors increases, cost-averse manufacturers, including the high-end brands, are trying to collapse all their various control, data, and media networks onto a single twisted-pair Ethernet running at 100 Mbit/s or 1 Gbit/s.

But a shared network raises the latency issue again. Because Ethernet creates delivery uncertainties, some sort of synchronizing protocol, such as IEEE 1588, the Time-Triggered Protocol (TTP), or Audio Video Bridging (AVB), would appear necessary. “This is still an active discussion,” Schirrmeister said. “The existing protocols are not yet sufficient for everything these systems need to do.” Abaye, who has 100 Mbit/s transceivers to sell, is more confident. “Our opinion is that the AVB protocol is sufficient,” he stated.
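As an illustration of what such protocols compute, the IEEE 1588 delay request-response exchange recovers a slave clock's offset from four timestamps. The sketch below shows only that arithmetic, under the protocol's assumption that the path delay is the same in both directions; the function name and sample timestamps are hypothetical, and AVB's timing profile (IEEE 802.1AS) uses a related peer-delay mechanism that is not shown here.

```python
def ptp_offset_and_delay(t1, t2, t3, t4):
    """IEEE 1588 delay request-response arithmetic (all times in ns).

    t1: master sends Sync          (master clock)
    t2: slave receives Sync        (slave clock)
    t3: slave sends Delay_Req      (slave clock)
    t4: master receives Delay_Req  (master clock)
    Assumes the path delay is identical in both directions.
    """
    forward = t2 - t1   # path delay + offset
    backward = t4 - t3  # path delay - offset
    mean_path_delay = (forward + backward) / 2
    offset_from_master = (forward - backward) / 2
    return offset_from_master, mean_path_delay


# Hypothetical exchange: slave clock 500 ns ahead, true one-way delay 1000 ns.
offset, delay = ptp_offset_and_delay(t1=0, t2=1500, t3=3000, t4=3500)
print(offset, delay)  # 500.0 1000.0
```

Any asymmetry between the two directions, such as queuing behind other traffic on a shared link, shows up directly as an offset error, which is one reason the sufficiency of existing protocols is still debated.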

These debates will have system implications well beyond the cost of cabling. Gigabit Ethernet implies silicon at advanced process nodes, where cost, availability, and soft-error rates become open questions. Synchronizing protocols are not exactly lightweight, implying the need for more powerful network adapters. And the need to store, and possibly reorder, frames of time-stamped data from many sensors could enlarge memory footprints.
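A reorder buffer of the kind implied here can be sketched with a priority queue keyed on timestamp. This version, with hypothetical names and integer microsecond timestamps, holds each sample for a fixed window so late arrivals can slot into place, and counts samples that arrive too late to be used. Its worst-case occupancy is roughly the window length times the combined sample rate of all sensors, which is where the memory-footprint concern comes from.

```python
import heapq


class ReorderBuffer:
    """Release time-stamped sensor samples in timestamp order.

    Each sample is held until it is at least window_us old, giving late
    arrivals that long to slot into place. A sample older than the last
    one already released is counted as dropped.
    """

    def __init__(self, window_us):
        self.window_us = window_us
        self._heap = []  # entries: (timestamp_us, seq, sensor_id, payload)
        self._seq = 0    # tie-breaker so payloads are never compared
        self._last_released = None
        self.dropped = 0

    def push(self, timestamp_us, sensor_id, payload):
        if self._last_released is not None and timestamp_us < self._last_released:
            self.dropped += 1  # too late: the buffer has already moved past it
            return
        heapq.heappush(self._heap, (timestamp_us, self._seq, sensor_id, payload))
        self._seq += 1

    def pop_ready(self, now_us):
        """Return (timestamp_us, sensor_id, payload) tuples old enough to release."""
        cutoff = now_us - self.window_us
        ready = []
        while self._heap and self._heap[0][0] <= cutoff:
            ts, _, sensor_id, payload = heapq.heappop(self._heap)
            self._last_released = ts
            ready.append((ts, sensor_id, payload))
        return ready

    def __len__(self):
        return len(self._heap)


buf = ReorderBuffer(window_us=20_000)         # hypothetical 20 ms window
buf.push(10_000, "radar", "r1")
buf.push(0, "cam0", "f1")                     # arrives out of order
buf.push(5_000, "cam1", "f2")
released = buf.pop_ready(now_us=30_000)       # releases all three, oldest first
buf.push(8_000, "cam2", "f3")                 # too late: counted in buf.dropped
print([sensor for _, sensor, _ in released])  # ['cam0', 'cam1', 'radar']
```

A longer window drops fewer late samples but holds more data and adds latency to every sample, so the window size is itself a system trade-off.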

A Multibody Problem

As a final point, when you put radar or scanning lasers into the ADAS architecture, you get a fascinating side-effect. The ADAS on nearby vehicles can now interact with each other. This could lead to sensor interference, or even to an unstable multivehicle system in which two cars hazard-avoid right into each other. This is not a whimsical question: there are hazard-avoidance algorithms that, when used by multiple vehicles in the same traffic stream, are known to lead inevitably to crashes.

“There has already been some research into the behavior of multi-ADAS systems,” Schirrmeister said. “It is an area of continuing interest.”

Such questions will almost certainly involve regulatory agencies in North America and the European Union in the design of ADAS algorithms at some level. Schirrmeister speculated that in developing countries, where cities can spring up and create all-new infrastructure as they go, there may be a move to coordinate ADAS evolution with the development of smart highways.

In any case, it is clear that verification of these systems will involve a significant degree of full-system, and perhaps multisystem, modeling. These will be huge tasks, going well beyond the experience of most system-design teams outside the military-aerospace community.

We have traced the evolution of one automotive system, ADAS, from a set of isolated control loops to a centralized sensor-fusing system. Other systems in the car will follow the same evolutionary path. Then the systems will begin to merge: ADAS, for example, working with the engine-control and traction systems, can bypass the driver altogether and maneuver the car away from trouble. The endpoint is an autonomous vehicle, and a network of intelligent control systems of stunning complexity built around a centralized model of the outside world.
